Inside the Architecture of a Deep Research Agent

In the rapidly evolving landscape of AI, Retrieval-Augmented Generation (RAG) has become the go-to solution for grounding language models in factual data. It has proven effective for question answering, but complex, exploratory tasks that demand analysis of large volumes of data and culminate in a structured narrative require us to look beyond simple RAG.

This isn’t just a theoretical challenge; it's the next step in our own product journey:

- Egnyte’s evolution in AI began by perfecting enterprise search, giving users the foundational ability to instantly and securely locate any file.

- We then took the first step into content intelligence with single-file Q&A, transforming passive documents into active sources of information.

- The next logical step was to break down information silos, allowing users to pose questions across a curated knowledge base.

- From there, we elevated the experience with a true AI agent for multi-file Q&A, which intelligently identifies relevant documents on the fly to synthesize a comprehensive answer.

This progression now culminates in a more advanced capability: the deep research agent, which moves beyond reactive Q&A to proactive task completion, autonomously discovering content, performing multi-step analysis, and generating net-new insights to become a true digital research partner.

This blog dives deep into the architecture that makes this leap possible. We will dissect the agent's multi-agent workflow, break down the methods it uses for sophisticated retrieval and analysis, and reveal the orchestration engine that brings it all to life.

What Is a Deep Research Agent and Why Do We Need It?

To understand the value of a deep research agent, we must see it as the next step in an evolutionary journey of agentic patterns at Egnyte, each designed to overcome the limitations of its predecessor.

The RAG Pattern

At its core, standard RAG is a powerful but stateless query engine. It excels at answering questions by finding relevant information and synthesizing it into an immediate response. However, its fundamental limitation is that it doesn't investigate. It can’t perform a series of actions, learn from them, and then decide on a new course. It answers one question, and its job is done.

The ReAct Pattern

The ReAct (Reasoning and Acting) framework was a major leap forward, giving a single agent chain-of-thought reasoning and the ability to use tools in a loop. A ReAct agent tackles multi-hop questions by repeatedly reasoning about which tool to use next (Thought → Action → Observation), so it can answer complex queries that require chaining several steps together.
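To make the loop concrete, here is a minimal Python sketch of a ReAct-style controller. The `policy` callable stands in for the LLM and the `lookup` tool stands in for a real search tool. Both are hypothetical, and the scripted policy exists only so the example runs deterministically. Everything the agent knows lives in a flat `scratchpad` list, which is the limitation discussed below.

```python
from typing import Callable

Step = tuple[str, str, str, str]  # (thought, action, action_input, observation)


def react_loop(question: str, policy: Callable, tools: dict[str, Callable[[str], str]],
               max_steps: int = 5) -> str:
    """Run Thought -> Action -> Observation until the policy chooses 'finish'."""
    scratchpad: list[Step] = []
    for _ in range(max_steps):
        # The policy (an LLM in practice) sees the question and the scratchpad so far.
        thought, action, action_input = policy(question, scratchpad)
        if action == "finish":
            return action_input
        observation = tools[action](action_input)
        scratchpad.append((thought, action, action_input, observation))
    return "No answer within the step budget."


# Hypothetical scripted policy that answers a two-hop question.
def scripted_policy(question: str, scratchpad: list[Step]):
    if not scratchpad:
        return ("First find the author.", "lookup", "author of Hamlet")
    if len(scratchpad) == 1:
        author = scratchpad[0][3]
        return ("Now find the birthplace.", "lookup", f"birthplace of {author}")
    return ("I have the answer.", "finish", scratchpad[-1][3])


FACTS = {"author of Hamlet": "Shakespeare",
         "birthplace of Shakespeare": "Stratford-upon-Avon"}
TOOLS = {"lookup": lambda q: FACTS.get(q, "unknown")}

print(react_loop("Where was the author of Hamlet born?", scripted_policy, TOOLS))
# prints: Stratford-upon-Avon
```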

However, even ReAct falls short when faced with a true research task:

- It's tactical, not strategic: It excels at determining the next best action but lacks the foresight to create a high-level, multi-stage project plan before it begins. It reacts to observations rather than executing a premeditated strategy.

- It's sequential: It runs as a single process that can’t delegate tasks or explore multiple avenues of inquiry in parallel, which makes it inefficient for broad research topics.

- It lacks deep synthesis capability: Its memory is typically confined to the scratchpad of its current task. It isn't designed to hold, structure, and synthesize vast amounts of information gathered over dozens of steps to identify emergent, high-level themes.

The Deep Research Agent (The Orchestrated Team of Agents)

This is where the deep research agent comes in. It addresses the strategic and scale limitations of ReAct by moving from a single-agent model to a multi-agent, orchestrated system.

We need this new architecture to tackle a class of problems that RAG and ReAct can’t. A deep research agent is designed to:

- Move from tactics to strategy: It doesn't just react. Its first action is to plan, creating a comprehensive research strategy structured as a directed acyclic graph (DAG).

- Move from a sequential worker to a parallel team: It acts as a project manager, dispatching multiple specialist agents to investigate different parts of the plan concurrently.

- Move from a scratchpad to a structured, persisted state: It systematically collects, structures, and synthesizes information over a long-running process, enabling it to perform the kind of deep analysis that reveals high-level insights and themes.

In essence, a deep research agent is necessary when the goal is not merely to answer a question, but to conduct exploratory research and produce a comprehensive report. It's the difference between looking up a fact and writing a thesis.

The Overall Design

Before we look into each agent, here’s a summary of the technologies and patterns used:

Technology and Frameworks

- LangGraph: The orchestration framework used to create stateful agent workflows

- FastAPI: Exposes the agent as an asynchronous REST API through which users submit research topics

Architectural Patterns

- Multi-agent architecture: The system is broken down into autonomous, specialized agents.

- Orchestration pattern: A central Master Agent orchestrates the specialized agents.

- Specialized LLM roles: The system uses different models for different tasks: a worker model for analytical tasks and a more powerful model for report generation.

- DAG for planning: The core research strategy is a DAG, allowing the system to model dependencies while identifying independent paths for parallel execution.

- Background task processing: The primary research task is executed asynchronously.

State and Persistence

The entire research workflow is built around a single, shared state managed by LangGraph. All the agents store their input, output, and intermediate data in this shared state: the initial user query, the generated research plan (DAG), the generated analyses, and so on.

LangGraph allows persistence of this state through its checkpointer mechanism. These checkpoints are saved with a thread ID, which allows resuming the workflow after a human-in-the-loop intervention or a system fault.
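The following stdlib-only Python sketch shows the idea of step-level checkpoints keyed by a thread ID, and of resuming from the last completed step. It is not LangGraph's API. In LangGraph you would pass a checkpointer to `compile()` and supply the thread ID as `config["configurable"]["thread_id"]`. The state fields, step names, and simulated fault are all hypothetical.

```python
import copy
from typing import Callable, Optional, TypedDict


class ResearchState(TypedDict, total=False):
    # Hypothetical fields mirroring the kinds of data described above.
    query: str
    plan: dict[str, list[str]]   # sub-question id -> ids it depends on
    analyses: dict[str, str]     # sub-question id -> Question Analysis
    report: str


class InMemoryCheckpointer:
    """Keeps a snapshot of the state after every completed step, per thread ID."""

    def __init__(self) -> None:
        self._history: dict[str, list[tuple[str, ResearchState]]] = {}

    def save(self, thread_id: str, step: str, state: ResearchState) -> None:
        # Deep-copy so that later mutations cannot alter a saved checkpoint.
        self._history.setdefault(thread_id, []).append((step, copy.deepcopy(state)))

    def latest(self, thread_id: str) -> Optional[tuple[str, ResearchState]]:
        history = self._history.get(thread_id)
        return history[-1] if history else None


Step = tuple[str, Callable[[ResearchState], ResearchState]]


def run(thread_id: str, state: ResearchState, steps: list[Step],
        checkpointer: InMemoryCheckpointer) -> ResearchState:
    """Run the steps in order, resuming after the last checkpointed step if one exists."""
    start = 0
    last = checkpointer.latest(thread_id)
    if last is not None:
        done_step, saved = last
        state = copy.deepcopy(saved)
        start = [name for name, _ in steps].index(done_step) + 1
    for name, fn in steps[start:]:
        state = fn(state)
        checkpointer.save(thread_id, name, state)
    return state


# Usage: the research step fails once; the rerun skips planning and resumes.
calls: list[str] = []
failures = {"research": 1}

def plan(s):
    calls.append("plan")
    return {**s, "plan": {"q1": [], "q2": ["q1"]}}

def research(s):
    calls.append("research")
    if failures["research"]:
        failures["research"] -= 1
        raise RuntimeError("simulated fault")
    return {**s, "analyses": {"q1": "analysis 1", "q2": "analysis 2"}}

def write(s):
    calls.append("write")
    return {**s, "report": "final report"}

steps = [("plan", plan), ("research", research), ("write", write)]
checkpointer = InMemoryCheckpointer()
try:
    run("thread-1", {"query": "emerging construction materials"}, steps, checkpointer)
except RuntimeError:
    pass  # the process "crashes" after the plan was checkpointed
final = run("thread-1", {"query": "emerging construction materials"}, steps, checkpointer)
print(calls)  # ['plan', 'research', 'research', 'write']
```

A human-in-the-loop pause can use the same mechanism. The workflow stops after a checkpointed step, and a later call with the same thread ID picks up from there.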

An Overview of the Agent Team

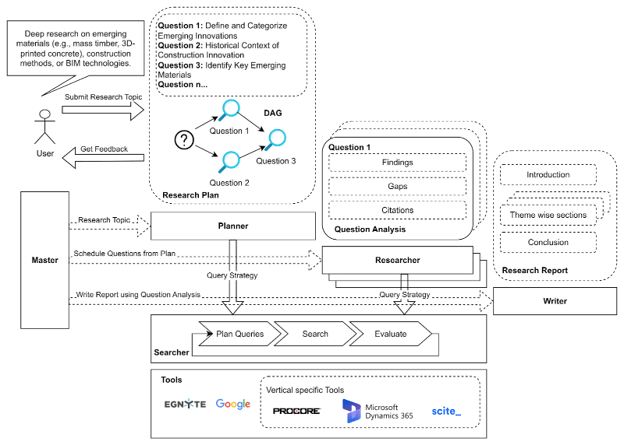

The Master

The Master Agent acts as the orchestrator, directing a team of specialized agents.

- Planning delegation: The workflow begins by delegating to the Planner Agent. It waits for the Planner to return a user-confirmed Research Plan structured as a DAG.

- The main research loop:

  - Schedule: Performs a topological traversal of the research plan DAG, identifying all questions whose dependencies have been met.

  - Dispatch (parallel processing): Implements a map/reduce-like pattern, running multiple Researcher Agent instances concurrently.

  - Synchronize and collect: A synchronization node collects the structured Question Analysis reports.

  - Loop: The graph then cycles back to the scheduling node until all nodes in the DAG have been processed.

- Reporting delegation: The master workflow delegates the final task to the Report Writer Agent.

- Completion: After receiving the final report, the master workflow concludes.
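The schedule, dispatch, and collect cycle can be sketched in plain Python with `asyncio`. This is a level-by-level (Kahn-style) traversal: each wave contains every unanswered question whose dependencies already have analyses. `research_question` is a hypothetical stand-in for a Researcher Agent, and `asyncio.gather` stands in for LangGraph's parallel branches. The plan below is an invented subset of the sample plan shown later, with made-up dependency edges.

```python
import asyncio


async def research_question(qid: str, question: str, prior: dict[str, str]) -> str:
    # Hypothetical Researcher Agent: a real one would search, read, and analyze.
    await asyncio.sleep(0)
    return f"analysis of {qid} (built on: {', '.join(sorted(prior)) or 'nothing'})"


async def master_loop(plan: dict[str, list[str]], questions: dict[str, str]):
    analyses: dict[str, str] = {}
    waves: list[list[str]] = []
    while len(analyses) < len(plan):
        # Schedule: every unanswered question whose dependencies are all answered.
        ready = sorted(q for q, deps in plan.items()
                       if q not in analyses and all(d in analyses for d in deps))
        if not ready:
            raise ValueError("plan has a cycle or an unknown dependency")
        # Dispatch (map): one researcher per ready question, run concurrently.
        results = await asyncio.gather(*(
            research_question(q, questions[q], {d: analyses[d] for d in plan[q]})
            for q in ready))
        # Synchronize and collect (reduce): gather preserves argument order.
        analyses.update(zip(ready, results))
        waves.append(ready)
    return analyses, waves


plan = {
    "define": [],
    "history": [],
    "materials": ["define"],
    "methods": ["define"],
    "outlook": ["materials", "methods", "history"],
}
questions = {qid: f"Research question for {qid}" for qid in plan}
analyses, waves = asyncio.run(master_loop(plan, questions))
print(waves)  # [['define', 'history'], ['materials', 'methods'], ['outlook']]
```

Each wave waits for its slowest question. A finer-grained scheduler could start a question as soon as its own dependencies finish. The wave form is simpler to reason about.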

The Information Gatherer

The Searcher Agent is the foundational, reusable component for all information retrieval. Its workflow is a search-and-refine loop:

- Search strategy: Formulates search queries as directed by the Planner and the Researcher agents.

- Execute queries: Each query in the portfolio is executed against its designated source. For public information, the agent uses a dedicated client for the Google Search API, which returns a list of relevant URLs and snippets. For internal data, it connects to Egnyte's Hybrid Search API.

- Rerank for relevance: The initial list of results is passed to a cross-encoder reranking model. Simple vector similarity compares separately computed embeddings. A cross-encoder instead processes the query and each document snippet together, which typically yields a more accurate semantic relevance score. This step ensures that only the most relevant results reach the expensive content extraction phase.

- Read, chunk, and diversify:

  - Scraping: The agent takes the top-ranked URLs and uses LangChain's UnstructuredURLLoader to extract their content.

  - Semantic chunking: The loader is configured to partition each document along its logical elements, creating semantically coherent chunks. Each chunk is enriched with the source document's metadata.

  - Diversification: The agent applies the maximal marginal relevance (MMR) algorithm to select a final set of chunks that are both relevant and informationally diverse.

- Evaluate and loop: Finally, it evaluates the accumulated context. A conditional edge in LangGraph routes the workflow back to generate new queries if more information is needed.
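The two filtering stages can be sketched as follows. `score_pair` is a hypothetical placeholder for a cross-encoder; here it is a crude word-overlap count, so the example runs without a model. The toy 2-D vectors stand in for chunk embeddings. The `mmr` function implements the standard greedy MMR criterion. At each step it picks the chunk that maximizes λ·relevance − (1 − λ)·(max similarity to chunks already selected). The parameter name `lambda_mult` mirrors the one used by LangChain's vector-store MMR search.

```python
import math
from typing import Callable, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rerank(query: str, snippets: list[str],
           score_pair: Callable[[str, str], float], top_k: int) -> list[str]:
    # A cross-encoder scores each (query, snippet) pair jointly; keep the best top_k.
    return sorted(snippets, key=lambda s: score_pair(query, s), reverse=True)[:top_k]


def mmr(query_vec: Sequence[float], chunk_vecs: list[Sequence[float]],
        k: int, lambda_mult: float = 0.5) -> list[int]:
    """Greedily pick k chunk indices, balancing relevance against redundancy."""
    selected: list[int] = []
    candidates = list(range(len(chunk_vecs)))

    def score(i: int) -> float:
        relevance = cosine(query_vec, chunk_vecs[i])
        redundancy = max((cosine(chunk_vecs[i], chunk_vecs[j]) for j in selected),
                         default=0.0)
        return lambda_mult * relevance - (1 - lambda_mult) * redundancy

    while candidates and len(selected) < k:
        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected


def word_overlap(query: str, snippet: str) -> int:
    # Toy stand-in for a cross-encoder relevance score.
    return len(set(query.lower().split()) & set(snippet.lower().split()))


print(rerank("mass timber fire safety",
             ["mass timber fire ratings", "concrete curing times", "timber prices"],
             word_overlap, top_k=2))
# prints: ['mass timber fire ratings', 'timber prices']

query = [1.0, 1.0]
chunks = [[1.0, 0.8], [1.0, 0.78], [0.7, 1.0]]  # chunk 1 nearly duplicates chunk 0
print(mmr(query, chunks, k=2))                   # prints: [0, 2]
print(mmr(query, chunks, k=2, lambda_mult=1.0))  # pure relevance, prints: [0, 1]
```

Under pure relevance ranking, the near-duplicate chunk 1 takes the second slot. With `lambda_mult=0.5`, MMR replaces it with the slightly less relevant but more informative chunk 2.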

The Planner

The Planner Agent creates the blueprint for the entire research project, primarily using a worker LLM.

- Initial query portfolio generation: It invokes the Searcher Agent with a query strategy. The Searcher Agent uses this strategy to generate a broad set of initial search queries designed to establish foundational knowledge.

- Exploratory search: The Searcher Agent runs this portfolio of queries to gather initial information and build a search context.

- Synthesize analysis: The Planner Agent processes this context and generates the Topic Analysis, including the problem statement and the key research angles.

- Generate plan as a DAG: It then creates the Research Plan as a DAG, in which each node is a sub-question and each edge is a dependency between sub-questions. The plan is built top-down.

- Await confirmation (HITL): It implements the human-in-the-loop pattern by pausing the workflow to await user validation and modification of the plan.

- Updating the shared state: Finally, the Planner Agent writes the confirmed plan to the shared state, where the Master reads it once planning completes.
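A flawed plan propagates into every later step, so it is worth checking the DAG's structure before showing it to the user. Below is a minimal sketch that assumes a hypothetical `SubQuestion` schema with explicit `depends_on` lists; the post does not specify the plan's format. The check reports duplicate IDs, dependencies on unknown questions, and cycles, using a three-color depth-first search. The dependency edges in the usage example are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SubQuestion:
    # Hypothetical node schema for the Research Plan DAG.
    qid: str
    question: str
    depends_on: list[str] = field(default_factory=list)


def validate_plan(nodes: list[SubQuestion]) -> list[str]:
    """Return a list of problems; an empty list means the plan is a valid DAG."""
    problems: list[str] = []
    ids = [n.qid for n in nodes]
    if len(ids) != len(set(ids)):
        problems.append("duplicate question ids")
    by_id = {n.qid: n for n in nodes}
    for n in nodes:
        for dep in n.depends_on:
            if dep not in by_id:
                problems.append(f"{n.qid} depends on unknown question {dep}")

    # Three-color DFS: reaching a GRAY (in-progress) node again means a cycle.
    WHITE, GRAY, BLACK = 0, 1, 2
    color = {qid: WHITE for qid in by_id}

    def has_cycle_from(qid: str) -> bool:
        color[qid] = GRAY
        for dep in by_id[qid].depends_on:
            if dep not in by_id:
                continue  # already reported above
            if color[dep] == GRAY or (color[dep] == WHITE and has_cycle_from(dep)):
                return True
        color[qid] = BLACK
        return False

    if any(color[qid] == WHITE and has_cycle_from(qid) for qid in by_id):
        problems.append("dependency cycle detected")
    return problems


plan = [
    SubQuestion("define", "Define and categorize emerging innovations"),
    SubQuestion("materials", "Identify key emerging materials", ["define"]),
    SubQuestion("outlook", "Synthesize interdependencies and future outlook", ["materials"]),
]
print(validate_plan(plan))  # prints: []

broken = plan + [SubQuestion("a", "Question A", ["b"]),
                 SubQuestion("b", "Question B", ["a", "z"])]
print(validate_plan(broken))
# prints: ['b depends on unknown question z', 'dependency cycle detected']
```

In a human-in-the-loop flow, any problems found here could be sent back to the Planner for another attempt before the workflow pauses for user confirmation.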

A sample research plan

Research Topic: Deep research on emerging materials (e.g., mass timber, 3D-printed concrete), construction methods, or building information technologies (BIM).

Emerging Materials, Construction Methods, and BIM Technologies

- Define and categorize emerging innovations: What are the specific definitions and categories of 'emerging materials', 'emerging construction methods', and 'BIM technologies' that will be the focus of this research?

- Historical context of construction innovation: What is the historical context and evolution of innovation within the construction industry, and how do current emerging trends compare to past revolutionary changes?

- Identify key emerging materials: What are the most prominent emerging materials (e.g., mass timber, 3D-printed concrete, self-healing concrete, advanced composites) currently being developed and adopted in construction?

- Identify key emerging construction methods: What are the leading emerging construction methods (e.g., modular construction, prefabrication, robotics, automated construction) that are gaining traction globally?

- Characterize advancements in BIM technologies: What are the latest advancements and applications within Building Information Modeling (BIM) technologies, including digital twins, AI integration, and cloud-based platforms?

- Current adoption and geographical distribution: What are the current adoption rates, market penetration, and key geographical areas for these identified emerging materials, construction methods, and BIM technologies?

- Analyze performance and limitations of emerging materials: What are the technical performance characteristics (e.g., structural, thermal, environmental) of key emerging materials, along with their associated benefits, challenges, and limitations (e.g., cost, scalability, regulatory hurdles)?

- Evaluate efficiency and viability of emerging construction methods: How do emerging construction methods impact project efficiency (time, cost), safety, quality, and environmental footprint, and what are their economic and logistical viability challenges?

- Assess impact of Advanced BIM technologies: What is the impact of advanced BIM technologies on project lifecycle management, stakeholder collaboration, data exchange, and decision-making processes in construction projects?

- Synthesize interdependencies and future outlook: How do emerging materials, construction methods, and BIM technologies interrelate and create synergistic opportunities for the future of construction, and what are the key trends and predictions for their widespread adoption?

User feedback: Focus more on the U.S. and European markets and include research on emerging materials in these geographies.

(An example of the human-in-the-loop step, in which the user reviews the plan during planning and requests modifications before validating it.)

Revised Research Plan: Emerging Materials, Construction Methods, and BIM Technologies in U.S. and Europe

- Define and categorize emerging innovations: What are the specific definitions and categories of 'emerging materials', 'emerging construction methods', and 'BIM technologies' that will be the focus of this research?

- Historical context of construction innovation: What is the historical context and evolution of innovation within the construction industry, and how do current emerging trends compare to past revolutionary changes?

- Identify key emerging materials in U.S. and Europe: What are the most prominent emerging materials (e.g., mass timber, 3D-printed concrete, self-healing concrete, advanced composites) currently being developed and adopted specifically within the U.S. and European construction markets?

- Identify key emerging construction methods in U.S. and Europe: What are the leading emerging construction methods (e.g., modular construction, prefabrication, robotics, automated construction) that are gaining traction specifically within the U.S. and European construction markets?

- Characterize advancements in BIM technologies in U.S. and Europe: What are the latest advancements and applications within Building Information Modeling (BIM) technologies, including digital twins, AI integration, and cloud-based platforms, with a focus on their uptake and relevance in the U.S. and European construction sectors?

- Current adoption and market penetration in U.S. and Europe: What are the current adoption rates, market penetration, and key geographical areas within the U.S. and Europe for these identified emerging materials, construction methods, and BIM technologies?

- Analyze performance and limitations of emerging materials in U.S. and Europe: What are the technical performance characteristics (e.g., structural, thermal, environmental) of key emerging materials, along with their associated benefits, challenges, and limitations (e.g., cost, scalability, regulatory hurdles) as they pertain to the U.S. and European construction markets?

- Evaluate efficiency and viability of emerging construction methods in U.S. and Europe: How do emerging construction methods impact project efficiency (time, cost), safety, quality, and environmental footprint, and what are their economic and logistical viability challenges within the U.S. and European regulatory and market environments?

- Assess impact of Advanced BIM technologies in U.S. and Europe: What is the impact of advanced BIM technologies on project lifecycle management, stakeholder collaboration, data exchange, and decision-making processes in construction projects, specifically considering their application and influence in the U.S. and European markets?

- Synthesize interdependencies and future outlook in U.S. and Europe: How do emerging materials, construction methods, and BIM technologies interrelate and create synergistic opportunities for the future of construction, and what are the key trends and predictions for their widespread adoption within the U.S. and European contexts?

The Researcher

The Master Agent runs multiple instances of this Researcher Agent in parallel. Its key feature is its ability to gather findings and adapt.

- Assigned question: The Researcher Agent reads its assigned question and any relevant prior findings from the state object.

- Strategic query formulation: It invokes the Searcher Agent with a query strategy. The Searcher Agent uses this strategy to generate queries along three complementary lines:

  - Question deconstruction: It breaks the assigned research question down into logical sub-components.

  - Keyword deepening: It extracts specific entities from the findings of previously answered questions to use as new search keywords.

  - Gap-driven inquiry: Most importantly, it analyzes the identified gaps field of the previous analyses. If an earlier step noted a weakness, the LLM is explicitly instructed to formulate new queries that fill that specific gap, creating a feedback loop.

- Focused deep search: The Searcher Agent runs this refined set of queries and builds a context from the results.

- Structured analysis and self-evaluation: The Researcher Agent uses this context to synthesize its Question Analysis. The analysis contains its findings with citations and also a new list of identified gaps for the next researcher in the chain to use.

- Updating the shared state: Finally, the Researcher Agent writes its Question Analysis to the shared state. The analyses from all Researcher Agents accumulate there, and the Master reads each one when its agent completes.
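The bookkeeping behind these three prongs can be sketched as a function that gathers inputs from prior analyses and turns them into a query strategy for the Searcher. `QuestionAnalysis`, its `entities` and `identified_gaps` fields, and `render_prompt` are hypothetical. In the actual system an LLM performs the deconstruction, entity extraction, and query writing. This sketch only shows how information flows from earlier researchers into the next search.

```python
from dataclasses import dataclass, field


@dataclass
class QuestionAnalysis:
    # Hypothetical shape of one Researcher Agent's output in the shared state.
    qid: str
    findings: list[str]
    citations: list[str]
    entities: list[str] = field(default_factory=list)         # extracted from findings
    identified_gaps: list[str] = field(default_factory=list)  # weaknesses to follow up


def build_query_strategy(sub_components: list[str],
                         prior: list[QuestionAnalysis]) -> dict[str, list[str]]:
    """Collect the inputs for the three query prongs from dependency analyses."""
    return {
        # Question deconstruction: sub-components of the assigned question.
        "sub_components": sub_components,
        # Keyword deepening: unique entities surfaced by earlier findings.
        "keywords": sorted({e for a in prior for e in a.entities}),
        # Gap-driven inquiry: every gap noted upstream, de-duplicated in order.
        "gaps": list(dict.fromkeys(g for a in prior for g in a.identified_gaps)),
    }


def render_prompt(question: str, strategy: dict[str, list[str]]) -> str:
    # Instructions an LLM might receive; gaps are called out explicitly.
    lines = [f"Research question: {question}",
             "Write search queries covering each sub-component:",
             *[f"- {c}" for c in strategy["sub_components"]],
             "Use these entities from earlier findings as keywords: "
             + ", ".join(strategy["keywords"]),
             "Write at least one query that fills each of these gaps:",
             *[f"- {g}" for g in strategy["gaps"]]]
    return "\n".join(lines)


prior = [QuestionAnalysis(
    qid="materials",
    findings=["Placeholder finding about CLT adoption."],
    citations=["https://example.com/source-1"],
    entities=["glulam", "CLT"],
    identified_gaps=["Missing U.S. fire-performance data for CLT"],
)]
question = "What are the performance limits of emerging materials?"
strategy = build_query_strategy(["structural performance", "thermal performance"], prior)
print(strategy["keywords"])  # prints: ['CLT', 'glulam']
print(render_prompt(question, strategy))
```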

The Author

The Writer Agent is the final agent, and it is where the system switches to the more powerful LLM. Its workflow is designed for high-quality, long-form composition.

- Holistic outline generation via thematic analysis: The agent performs a meta-analysis across the entire collection of Question Analyses to identify emergent, overarching themes. These themes become the main sections of the final report. Deriving the outline from the findings, rather than from the original plan, matters because the research may surface findings that contradict assumptions made during planning.

- Divide and conquer for long-form generation: Instead of a single, massive prompt to write the entire report (which can lead to loss of focus or hitting context limits), the workflow uses a parallel processing pattern. It dispatches a separate writing task for each theme, allowing the high-quality LLM to produce a deeply detailed and coherent narrative for each section independently.

- Final assembly: Once the individually generated sections are complete, a final node assembles them in the correct order between the pre-written introduction and conclusion.
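Here is a minimal sketch of this divide-and-conquer step. It assumes the thematic analysis has already produced an ordered mapping from each theme to the Question Analyses it draws on. `write_section` is a hypothetical stand-in for one call to the more powerful LLM, and `asyncio.gather` plays the role of the parallel fan-out. Because `gather` returns results in the order its awaitables were passed, the sections come back in outline order regardless of which finishes first.

```python
import asyncio


async def write_section(theme: str, analyses: list[str]) -> str:
    # Hypothetical: a real implementation would prompt the more powerful LLM
    # with the theme and only the analyses relevant to it.
    await asyncio.sleep(0)
    return f"## {theme}\n(Section synthesized from {len(analyses)} analyses.)"


async def write_report(outline: dict[str, list[str]], introduction: str,
                       conclusion: str) -> str:
    themes = list(outline)  # dicts preserve insertion order, i.e. the outline order
    # One independent writing task per theme, all running concurrently.
    sections = await asyncio.gather(*(write_section(t, outline[t]) for t in themes))
    # Final assembly: introduction, sections in outline order, conclusion.
    return "\n\n".join([introduction, *sections, conclusion])


outline = {
    "Material innovation": ["materials analysis", "performance analysis"],
    "Industrialized construction": ["methods analysis"],
    "Digital delivery with BIM": ["bim analysis", "impact analysis"],
}
report = asyncio.run(write_report(outline, "Introduction.", "Conclusion."))
print(report)
```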

Conclusion and Challenges

The deep research agent architecture represents a significant evolution from simple RAG and tactical ReAct agents. It adopts an agent-based design, stateful graph orchestration, and specialized LLMs for distinct tasks. Together, these let it tackle complex research with a process that resembles how a human researcher plans, investigates, and writes.

However, this power comes with challenges:

- Cost and latency: The numerous LLM calls make this a "heavy" process, trading speed for depth and quality.

- Error propagation: An error in an early stage (like a flawed research plan) can negatively impact all subsequent steps. Robust validation and the HITL pattern are crucial.

- Evaluation: Objectively scoring the quality of a generated research report remains difficult and still depends largely on human judgment.

Despite these challenges, this architecture provides a blueprint for the future of AI-powered analysis. It moves us closer to systems that serve not just as information retrievers but as partners in research and discovery.