The Case of the Phantom Date: How a Single Pixel Fooled Our Visual AI

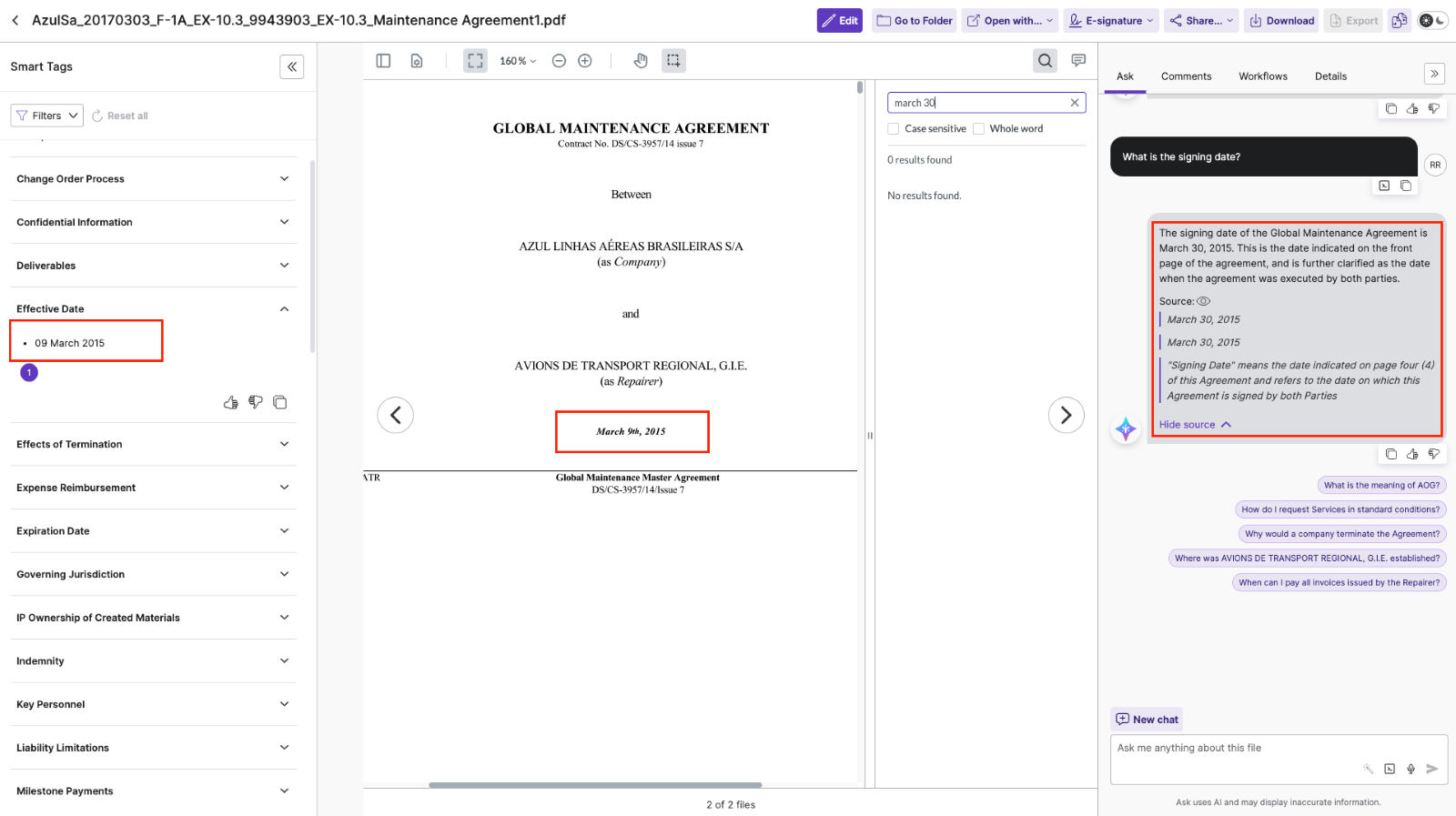

We’ve all seen it: a cutting-edge multimodal LLM, capable of understanding complex documents, stumbles on a seemingly simple task. In our case, the model confidently reported a contract’s signing date as "March 30". The only problem? The document clearly stated "March 9th".

It wasn't just a minor error; it was a baffling one that sent us down a rabbit hole of debugging. Our journey to the root cause is a modern-day detective story that highlights a crucial lesson for anyone working with visual AI.

The Initial Investigation

Our first steps were to check the obvious. We scoured the document's text for any mention of "March 30". Nothing. We then investigated our retrieval and reranking pipeline, suspecting that perhaps the wrong page or snippet was prioritized. Again, no issues were found. The system was looking at the right page.

So, why was it hallucinating the wrong date?

Peeking Into the AI's "Mind"

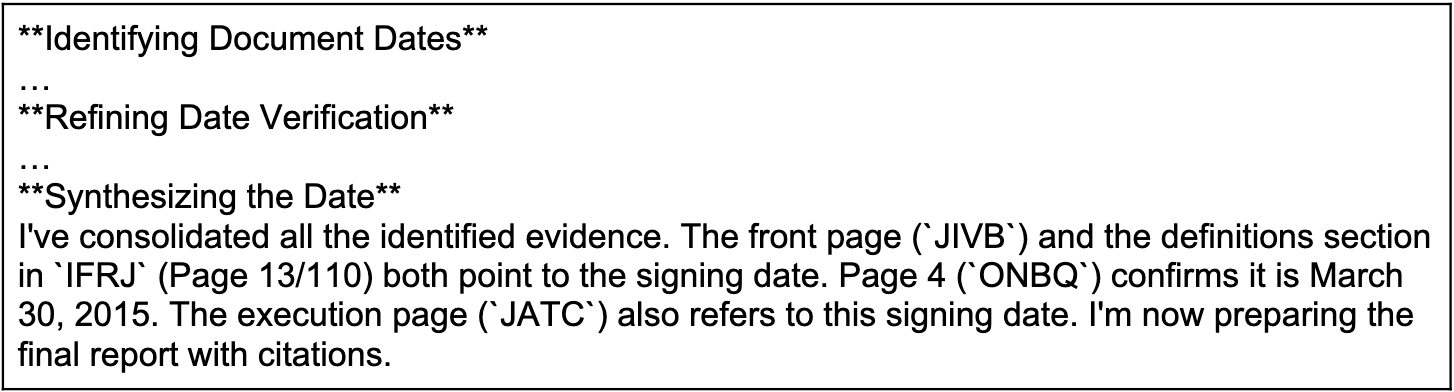

To dig deeper, we enabled the model's "thoughts" feature, which gives a view into its reasoning process.

The logs revealed that the AI was, in fact, confident in its sources. It correctly identified that the signing date was on the front page and cross-referenced it with other sections of the document. It even noted, "Page 4 (ONBQ) confirms it is March 30, 2015".

The model wasn't making a random guess; it genuinely "saw" the wrong date. That told us the problem lay not in the model's logic but in its perception, so the error had to be in the input we were feeding it.

The "Aha!" Moment: It's All in the Pixels

Since we use a multimodal approach, the LLM receives an image of the document, not just the extracted text. We rendered the exact image payload our system sent to the model and started searching for anything that could be misinterpreted as "March 30".
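If your pipeline sends images inline, a small helper makes this check repeatable. The sketch below assumes the payload is a base64 data URL (for example `data:image/png;base64,...`), a format several multimodal APIs accept for inline images. That format is an assumption here, not a description of our system, and `decode_image_payload` and `dump_payload` are hypothetical names.

```python
import base64
import re
from pathlib import Path

# Hypothetical payload format: "data:<mime>;base64,<data>" (an assumption, not our actual API).
_DATA_URL = re.compile(r"^data:(?P<mime>image/[\w.+-]+);base64,(?P<data>[A-Za-z0-9+/=]+)$")


def decode_image_payload(data_url: str) -> tuple[str, bytes]:
    """Split a base64 image data URL into its MIME type and raw image bytes."""
    match = _DATA_URL.match(data_url.strip())
    if match is None:
        raise ValueError("expected a base64-encoded image data URL")
    raw = base64.b64decode(match.group("data"), validate=True)
    return match.group("mime"), raw


def dump_payload(data_url: str, out_stem: str = "model_input") -> Path:
    """Write the exact bytes the model would receive to disk for visual inspection."""
    mime, raw = decode_image_payload(data_url)
    # "image/png" -> "png"; "image/svg+xml" -> "svg"
    extension = mime.split("/", 1)[1].split("+", 1)[0]
    path = Path(f"{out_stem}.{extension}")
    path.write_bytes(raw)
    return path
```

When you open the dumped file, view it at 100% zoom rather than fit-to-window, so the viewer's own rescaling doesn't hide the artifacts you are looking for.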

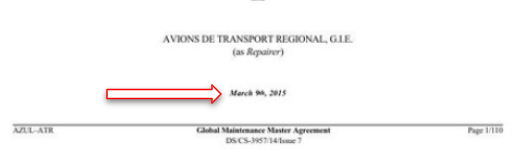

At first glance, nothing looked wrong: the cover page clearly read "March 9th, 2015". Then we remembered a critical detail: to optimize for performance, we downscale the images before processing.

When we looked at the low-resolution version of the image, the problem became immediately clear. The "9th" had been compressed and pixelated in such a way that it looked almost identical to a "30".

Original date: March 9th, 2015

What the AI saw: March 30, 2015

The machine wasn't failing at reasoning; it was failing at seeing. A simple downscaling step, a common and often necessary optimization, introduced a critical visual artifact that led the entire process astray.
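A toy example shows how downscaling can erase exactly the detail that separates two digits. The sketch below uses two hypothetical 4x4 binary "glyphs" that differ in a single pixel: a closed upper loop, loosely like a "9", versus an open stroke, loosely like a "3". Nearest-neighbor downscaling by a factor of 2 keeps only every second row and column, so the distinguishing pixel is dropped and the two glyphs become identical. Box averaging keeps only a faint trace of the difference. This illustrates the mechanism only; it is not our pipeline's actual resampling filter or the real document's pixels.

```python
# Toy binary glyphs (1 = ink, 0 = paper). These are hypothetical shapes,
# not pixels from the actual document. They differ only at row 1, column 0.
GLYPH_CLOSED = [  # closed upper loop, loosely like the top of a "9"
    [1, 1, 1, 1],
    [1, 0, 0, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 1],
]
GLYPH_OPEN = [  # open on the left, loosely like a "3"
    [1, 1, 1, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 1],
]


def downscale_nearest(img: list[list[int]], factor: int) -> list[list[int]]:
    """Nearest-neighbor downscale: keep the top-left pixel of each factor x factor block."""
    return [row[::factor] for row in img[::factor]]


def downscale_box(img: list[list[int]], factor: int) -> list[list[float]]:
    """Box-filter downscale: average each factor x factor block (assumes exact divisibility)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(0, h, factor):
        out_row = []
        for x in range(0, w, factor):
            block = [img[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


if __name__ == "__main__":
    print(downscale_nearest(GLYPH_CLOSED, 2))  # [[1, 1], [1, 1]]
    print(downscale_nearest(GLYPH_OPEN, 2))    # [[1, 1], [1, 1]]  (identical)
    print(downscale_box(GLYPH_CLOSED, 2))      # [[0.75, 0.75], [0.5, 0.75]]
    print(downscale_box(GLYPH_OPEN, 2))        # [[0.5, 0.75], [0.5, 0.75]]
```

Smoother resampling filters behave more like the box average than the nearest-neighbor case. Even so, once strokes become thinner than the new pixel grid, the detail that distinguishes one digit from another can blur away.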

The Simple Fix and the Lasting Lesson

The solution was as simple as the problem was subtle. We increased the scale of the image fed to the model, providing a higher-resolution input. With a clearer picture, the AI had no trouble correctly identifying the date as "March 9th".
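One way to make "increase the scale" less ad hoc is to size the render from the smallest text you need to read. The sketch below has several assumptions. It assumes a PDF source, where 1 point is 1/72 inch, and treats font size in points as a rough proxy for glyph height. It also uses a hypothetical legibility threshold (`min_text_px`) and a hypothetical pixel budget (`max_long_edge_px`). None of these values come from our pipeline; you would tune them against your own model and documents.

```python
import math

POINTS_PER_INCH = 72  # PDF user-space units: 1 pt = 1/72 inch


def text_height_px(font_pt: float, dpi: float) -> float:
    """Approximate pixel height of text set at font_pt when rasterized at dpi."""
    return font_pt * dpi / POINTS_PER_INCH


def choose_render_dpi(
    page_w_pt: float,
    page_h_pt: float,
    smallest_font_pt: float,
    min_text_px: float,
    max_long_edge_px: int,
) -> tuple[int, bool]:
    """Pick the lowest DPI that keeps the smallest text at least min_text_px tall.

    Returns (dpi, legible). If the pixel budget cannot reach that DPI, returns the
    largest DPI the budget allows and legible=False, so the caller knows the text
    will likely be too small instead of finding out from a wrong answer.
    """
    needed_dpi = math.ceil(min_text_px * POINTS_PER_INCH / smallest_font_pt)
    budget_dpi = math.floor(max_long_edge_px * POINTS_PER_INCH / max(page_w_pt, page_h_pt))
    if needed_dpi <= budget_dpi:
        return needed_dpi, True
    return budget_dpi, False


# US Letter is 612 x 792 pt. Hypothetical target: 10 pt text at >= 12 px tall.
print(choose_render_dpi(612, 792, 10, 12, max_long_edge_px=768))   # (69, False)
print(choose_render_dpi(612, 792, 10, 12, max_long_edge_px=1536))  # (87, True)
```

In the first call, the budget caps the render at 69 DPI, where 10 pt text is only about 9.6 px tall. The function flags that case instead of silently sending an image the model may misread.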

This incident was a powerful reminder: in the age of multimodal AI, the quality of your input is paramount. We often focus on the sophistication of the model, but garbage in is still garbage out, even if that "garbage" is just a few poorly rendered pixels. Before you assume your complex model has a complex problem, it’s always worth asking: Have you checked what it’s actually looking at?